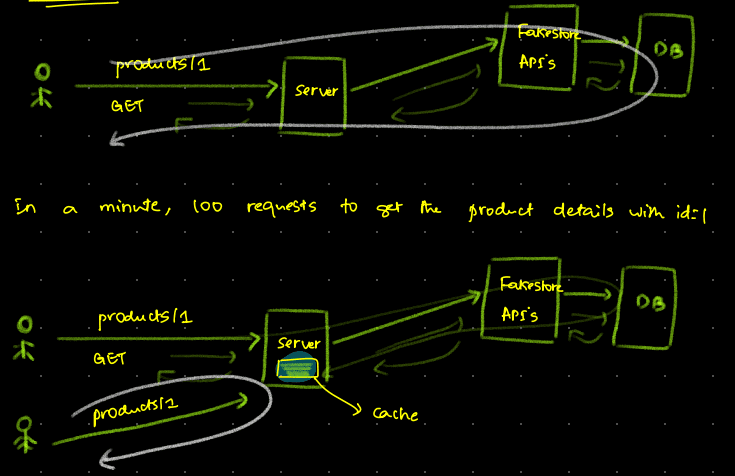

Let’s assume we have a product service and someone calls the product API with id 1. The request first hits our server, which forwards it to the FakeStore API. The FakeStore API fetches the data from its database and returns it to our server, which then sends the data back to the user.

This flow is illustrated in the first image. However, there is a significant issue: if we receive 100 requests per minute for the details of the product with id 1, our server makes 100 identical calls to the FakeStore API. Every request pays the full round-trip latency, and both our server and the FakeStore API carry unnecessary load.

A better solution is to implement a caching mechanism on our server. When the first request for the product with id 1 arrives, our server fetches the data and caches it. For subsequent requests for the same product id, the server returns the cached data. In the example above, only the first of the 100 requests reaches the FakeStore API and its database, which cuts upstream calls by about 99% (assuming the cached entry stays valid for that period), improving response times and reducing server load.
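The idea can be sketched in a few lines of Python. This is a minimal illustration, not the actual service: `CachedProductService`, `fake_fetch`, and the `upstream_calls` counter are hypothetical names, and `fake_fetch` stands in for the real HTTP call to the FakeStore API. The sketch also ignores expiry and invalidation.

```python
from typing import Callable, Dict


class CachedProductService:
    """Serves products from an in-memory cache, calling upstream only on a miss."""

    def __init__(self, fetch_product: Callable[[int], dict]):
        # fetch_product is whatever actually talks to the upstream API (assumed here).
        self._fetch_product = fetch_product
        self._cache: Dict[int, dict] = {}
        self.upstream_calls = 0  # counter kept only to show the effect of caching

    def get_product(self, product_id: int) -> dict:
        # Cache hit: return the stored copy without touching the upstream API.
        if product_id in self._cache:
            return self._cache[product_id]
        # Cache miss: fetch once, remember the result, then return it.
        self.upstream_calls += 1
        product = self._fetch_product(product_id)
        self._cache[product_id] = product
        return product


def fake_fetch(product_id: int) -> dict:
    # Hypothetical stand-in for the FakeStore API call.
    return {"id": product_id, "title": f"Product {product_id}"}


service = CachedProductService(fake_fetch)
for _ in range(100):
    service.get_product(1)

print(service.upstream_calls)  # 1
```

Out of 100 requests for the same id, only the first triggers an upstream call; the other 99 are answered from memory.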

Issue with the current caching solution:

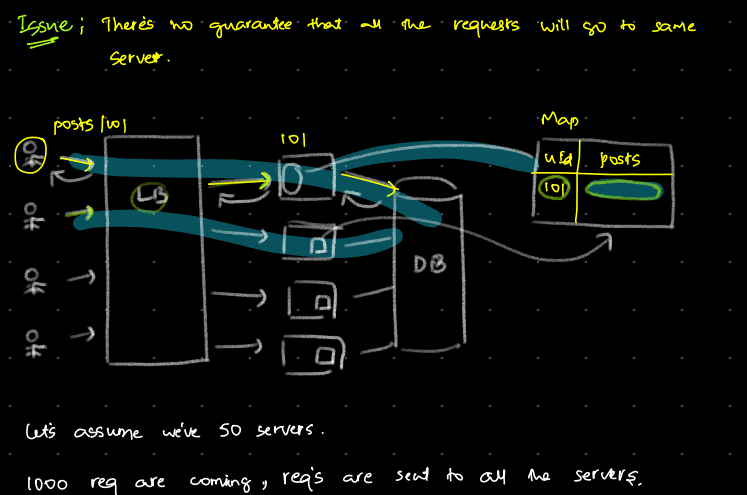

There is a significant issue with the above solution. If each server keeps its own cache and we run multiple servers, there is no guarantee that requests for the same product will always reach the same server. Requests can be routed to any of the servers.

Let’s assume we have 50 servers and 1000 requests for the same product, distributed across all servers. Each server has to miss once before it has the data cached, so up to 50 of those requests are slower because they go all the way to the FakeStore API. While this is somewhat acceptable, there is a better solution: a global cache shared by all servers.
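A small simulation makes the difference concrete. This is a rough sketch under stated assumptions: requests are routed uniformly at random, every request asks for product id 1, and a plain Python `set` stands in for each cache (in the global case, all servers share one `set`). The function name `count_upstream_calls` is hypothetical.

```python
import random


def count_upstream_calls(num_servers: int, num_requests: int,
                         shared_cache: bool, seed: int = 0) -> int:
    """Counts how many requests for product id 1 miss the cache and go upstream."""
    rng = random.Random(seed)
    if shared_cache:
        # Global cache: every server looks at the same store.
        global_cache = set()
        caches = [global_cache] * num_servers
    else:
        # Local caches: each server only knows what it has fetched itself.
        caches = [set() for _ in range(num_servers)]

    upstream_calls = 0
    for _ in range(num_requests):
        # Assumed routing: any server may receive any request.
        cache = caches[rng.randrange(num_servers)]
        if 1 not in cache:
            upstream_calls += 1  # miss: this request goes to the upstream API
            cache.add(1)
    return upstream_calls


local_calls = count_upstream_calls(50, 1000, shared_cache=False)
global_calls = count_upstream_calls(50, 1000, shared_cache=True)

print(local_calls)   # at most 50: one miss per server that received a request
print(global_calls)  # 1: only the very first request misses
```

With per-server caches the number of slow requests grows with the number of servers, while with a shared cache it stays at one per product regardless of how many servers are added.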