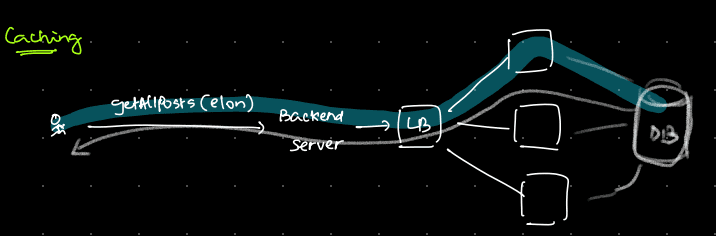

As illustrated above, if we want to get all posts by Elon Musk on Twitter, our request first reaches the backend, where a load balancer sits in front of multiple servers. The load balancer assigns the request to one of those servers, which then queries the database (DB), and the response travels back to us.
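To make the uncached flow concrete, here is a minimal sketch in which every request passes through a load balancer to a server and then to the database. The `Database`, `Server`, and `LoadBalancer` classes, the sample data, and the round-robin routing strategy are all hypothetical stand-ins, not a real Twitter component. The point is the counter: without a cache, every single request costs one database query.

```python
import itertools


class Database:
    """Hypothetical in-memory stand-in for the real database."""

    def __init__(self, data):
        self.data = data      # uid -> list of posts
        self.queries = 0      # how many times the DB has been hit

    def get_posts(self, uid):
        self.queries += 1
        return self.data.get(uid, [])


class Server:
    def __init__(self, name, db):
        self.name = name
        self.db = db

    def handle(self, uid):
        # No cache: every request goes straight to the database.
        return self.db.get_posts(uid)


class LoadBalancer:
    def __init__(self, servers):
        # Round-robin is an assumption; real load balancers use various strategies.
        self._cycle = itertools.cycle(servers)

    def route(self, uid):
        server = next(self._cycle)
        return server.name, server.handle(uid)


db = Database({"elonmusk": ["post 1", "post 2"]})
lb = LoadBalancer([Server("server-1", db), Server("server-2", db), Server("server-3", db)])

for _ in range(4):
    print(lb.route("elonmusk"))
print("DB queries:", db.queries)  # DB queries: 4
```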

Can we do something better?

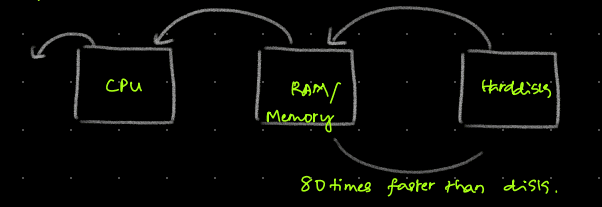

Let’s assume we have to solve 171×3 + 171×3. First, we calculate 171×3, which equals 513, and keep that result in memory. When we reach the second 171×3, we already know it is 513, so instead of recalculating it, we take it from memory and add the two: 513 + 513 = 1026. This is called caching. It is the same idea as memoization in Dynamic Programming (DP): if a value is already present in the cache, we use it instead of computing it again.
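The arithmetic example can be sketched as a small memoized function. This is an illustrative sketch only: the `multiply` helper, the `cache` dictionary, and the `computations` counter are hypothetical names chosen to show that the second 171×3 is served from memory rather than recomputed. (Python’s standard library offers `functools.lru_cache` and `functools.cache` for the same purpose.)

```python
# Cache of already-computed products: (a, b) -> a * b
cache = {}
computations = 0  # counts how many multiplications were actually performed


def multiply(a, b):
    global computations
    key = (a, b)
    if key in cache:
        return cache[key]  # cache hit: reuse the stored result
    computations += 1      # cache miss: compute and remember the result
    result = a * b
    cache[key] = result
    return result


total = multiply(171, 3) + multiply(171, 3)
print(total)         # 1026
print(computations)  # 1
```

Only the first call does the work; the second is a dictionary lookup.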

How the cache gets updated

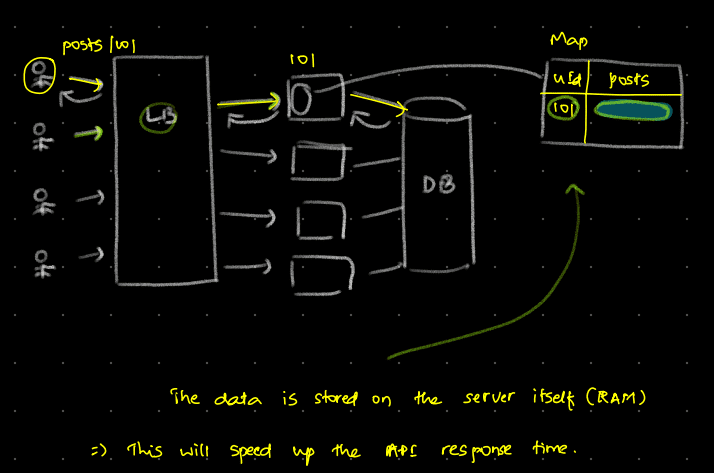

As illustrated above, when multiple users request Elon Musk’s posts, each request first goes to the load balancer, which directs it to one server. If that server has no cached copy, it queries the database (DB). When the response comes back, the server stores it in a map-like structure, using the user ID (uid) whose posts were requested as the key and the post data as the value. If the same request arrives again within 5 minutes, the server returns the cached data without touching the database. It’s unlikely that Elon will post something new within 5 minutes, and even if he does, other users will see the new post after a slight delay (at most until the cached entry expires), which is acceptable. Because the response data is held in the server’s RAM, repeated requests are answered much faster than a round trip to the database.
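A minimal sketch of this per-server cache, assuming a simple dictionary keyed by uid where each entry expires after 300 seconds (5 minutes). The `TTLCache`, `Database`, and `get_posts` names are hypothetical, and the injectable `clock` parameter is an assumption added so that expiry can be demonstrated without actually waiting 5 minutes.

```python
import time


class TTLCache:
    """Map-like cache where each entry expires ttl_seconds after it is stored."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # uid -> (expires_at, value)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._store[key]  # stale: drop it so the next lookup refetches
            return None
        return value

    def set(self, key, value):
        self._store[key] = (self.clock() + self.ttl, value)


class Database:
    """Hypothetical in-memory stand-in for the real database."""

    def __init__(self, data):
        self.data = data
        self.queries = 0

    def get_posts(self, uid):
        self.queries += 1
        return list(self.data.get(uid, []))


def get_posts(uid, cache, db):
    posts = cache.get(uid)
    if posts is None:  # cache miss or expired entry: go to the DB
        posts = db.get_posts(uid)
        cache.set(uid, posts)
    return posts


# Fake clock so the 5-minute expiry can be simulated instantly.
now = [0.0]
cache = TTLCache(ttl_seconds=300, clock=lambda: now[0])
db = Database({"elonmusk": ["post 1"]})

get_posts("elonmusk", cache, db)  # miss: hits the DB
get_posts("elonmusk", cache, db)  # hit: served from memory
now[0] = 301                      # more than 5 minutes later
get_posts("elonmusk", cache, db)  # expired: hits the DB again
print(db.queries)  # 2
```

The trade-off described above shows up directly: a new post added to the database is invisible to cached readers until their entry expires, in exchange for skipping the database on every repeat request.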

Advantages of Cache:

– Reduces database load

– Speeds up API response times